AI-Powered RAG Knowledge Assistant: Intelligent Document Processing & Query System

Enterprise-Grade Retrieval-Augmented Generation System Using Pinecone Vector Database and Advanced NLP for SEOContent.ai Platform Documentation

Category: AI & Machine Learning, Natural Language Processing, Vector Databases

Tools & Technologies: Python, Pinecone Vector DB, Sentence Transformers (E5-Large), Google Gemini AI, LangChain, PyPDF

Status: Completed & Deployed

Introduction

The AI-Powered RAG (Retrieval-Augmented Generation) Knowledge Assistant represents a cutting-edge solution for intelligent document processing and contextual question-answering. Built for SEOContent.ai's comprehensive documentation system, this project transforms static PDF documentation into a dynamic, queryable knowledge base using state-of-the-art vector database technology and large language models.

By leveraging Pinecone's high-performance vector database, multilingual transformer models, and Google's Gemini AI, the system enables instant, accurate responses to complex queries across 14 comprehensive documentation modules covering everything from user management to content generation strategies. This implementation showcases advanced skills in vector embeddings, semantic search, and the orchestration of multiple AI services to create a seamless knowledge retrieval experience.

Aim and Objectives

Aim:

To develop an intelligent document processing and retrieval system that

transforms static PDF documentation into a queryable, context-aware knowledge base using advanced

RAG techniques.

Objectives:

- Design and implement a robust PDF processing pipeline capable of handling multiple document formats and structures

- Create high-quality vector embeddings using state-of-the-art multilingual transformer models for semantic understanding

- Build a scalable vector storage solution using Pinecone for efficient similarity search across thousands of document chunks

- Integrate Google's Gemini AI for intelligent response generation based on retrieved context

- Implement comprehensive error handling and monitoring for production-grade reliability

- Optimize query performance to deliver sub-second response times for complex questions

System Architecture

The RAG Knowledge Assistant implements a sophisticated multi-stage pipeline that transforms static PDF documentation into an intelligent, queryable knowledge base. The architecture leverages best-in-class technologies at each stage to ensure optimal performance and accuracy.

RAG System Architecture Flow

┌─────────────────┐ ┌──────────────┐ ┌─────────────────┐

│ PDF Documents │────▶│ PyPDF │────▶│ Text Chunker │

│ (14 modules) │ │ Parser │ │ (LangChain) │

└─────────────────┘ └──────────────┘ └─────────────────┘

│

▼

┌─────────────────┐ ┌──────────────┐ ┌─────────────────┐

│ User Query │────▶│ E5-Large │◀────│ E5-Large │

│ │ │ Embedding │ │ Embedding │

└─────────────────┘ │ (Query) │ │ (Documents) │

└──────────────┘ └─────────────────┘

│ │

▼ ▼

┌──────────────┐ ┌─────────────────┐

│ Pinecone │◀────│ Pinecone │

│ Search │ │ Upsert │

│ (Retrieval) │ │ (Storage) │

└──────────────┘ └─────────────────┘

│

▼

┌──────────────┐

│ Gemini AI │

│ 1.5 Flash │

│ (Context) │

└──────────────┘

│

▼

┌──────────────┐

│ Response │

│ with Source │

└──────────────┘

Architecture Components

The system architecture consists of four integrated layers working in harmony to deliver intelligent document processing:

- Document Processing: Utilizes PyPDF for robust PDF text extraction combined with LangChain's RecursiveCharacterTextSplitter, creating 1000-character chunks with 100-character overlap while preserving critical metadata including page numbers and source references.

- Embedding Generation: Employs the multilingual E5-Large transformer model to create 1024-dimensional vector representations, using batch processing for optimal efficiency and semantic similarity encoding for accurate content matching.

- Storage & Retrieval: Leverages Pinecone's serverless vector database infrastructure with cosine similarity metrics, implementing a Top-K retrieval strategy that delivers sub-second query performance even across large document collections.

- Response Generation: Integrates Google Gemini 1.5 Flash model for context-aware response synthesis, incorporating source attribution mechanisms and engineered prompts to prevent hallucination while maintaining accuracy.

Technical Implementation Details

Core Components

PDF Processing Engine

Advanced document parser using PyPDF for text extraction with intelligent chunking strategies

Embedding Model

Multilingual E5-Large transformer generating 1024-dimensional vectors for semantic representation

Pinecone Vector DB

High-performance vector database with cosine similarity for efficient semantic search

Google Gemini AI

Advanced LLM for contextual answer generation from retrieved document segments

Implementation Pipeline Stages

- Document Ingestion: PDF files are parsed page-by-page with metadata preservation using PyPDF reader

- Text Chunking: Recursive character splitting with 1000-character chunks and 100-character overlap for context preservation

- Vector Generation: Each chunk is encoded into a 1024-dimensional vector using the E5-Large multilingual model

- Index Storage: Vectors are stored in Pinecone with comprehensive metadata including source, page number, and chunk index

- Query Processing: User queries are embedded and matched against the vector index using cosine similarity

- Response Generation: Retrieved context is augmented and passed to Gemini for intelligent answer synthesis

Features & Capabilities

- Intelligent Document Processing: Handles 14+ PDF documents with automatic text extraction, chunking, and metadata preservation

- Multilingual Support: Uses multilingual transformer models supporting 100+ languages for global accessibility

- Semantic Search: Vector-based similarity search ensures contextually relevant results even with varied query phrasing

- Context-Aware Responses: RAG architecture combines retrieved knowledge with LLM capabilities for accurate, grounded answers

- Scalable Architecture: Serverless Pinecone deployment supports millions of vectors with consistent performance

- Real-Time Performance: Optimized embedding and retrieval pipeline delivers responses in under 2 seconds

- Source Attribution: Every response includes document sources and page numbers for verification and traceability

Implementation Results

The system successfully processed and indexed comprehensive documentation for the SEOContent.ai platform:

Configuration: - Pinecone Index: scai-index - Embedding Model: intfloat/multilingual-e5-large - Expected Dimension: 1024 - Chunk Size: 1000 - Chunk Overlap: 100 - Pinecone Batch Size: 100 === Starting PDF Processing === Found 14 PDF file(s): - AI Agent Knowledge - The User.pdf - AI Agent Knowledge - The System (AI Agent).pdf - AI Agent Knowledge - Templates.pdf - AI Agent Knowledge - Rules & Permissions.pdf - AI Agent Knowledge - Content Strategy.pdf [... 9 more documents ...] Processing Complete. Total vectors prepared: 102 ✓ Total vectors upserted: 102 === Process Complete ===

Query Performance Examples

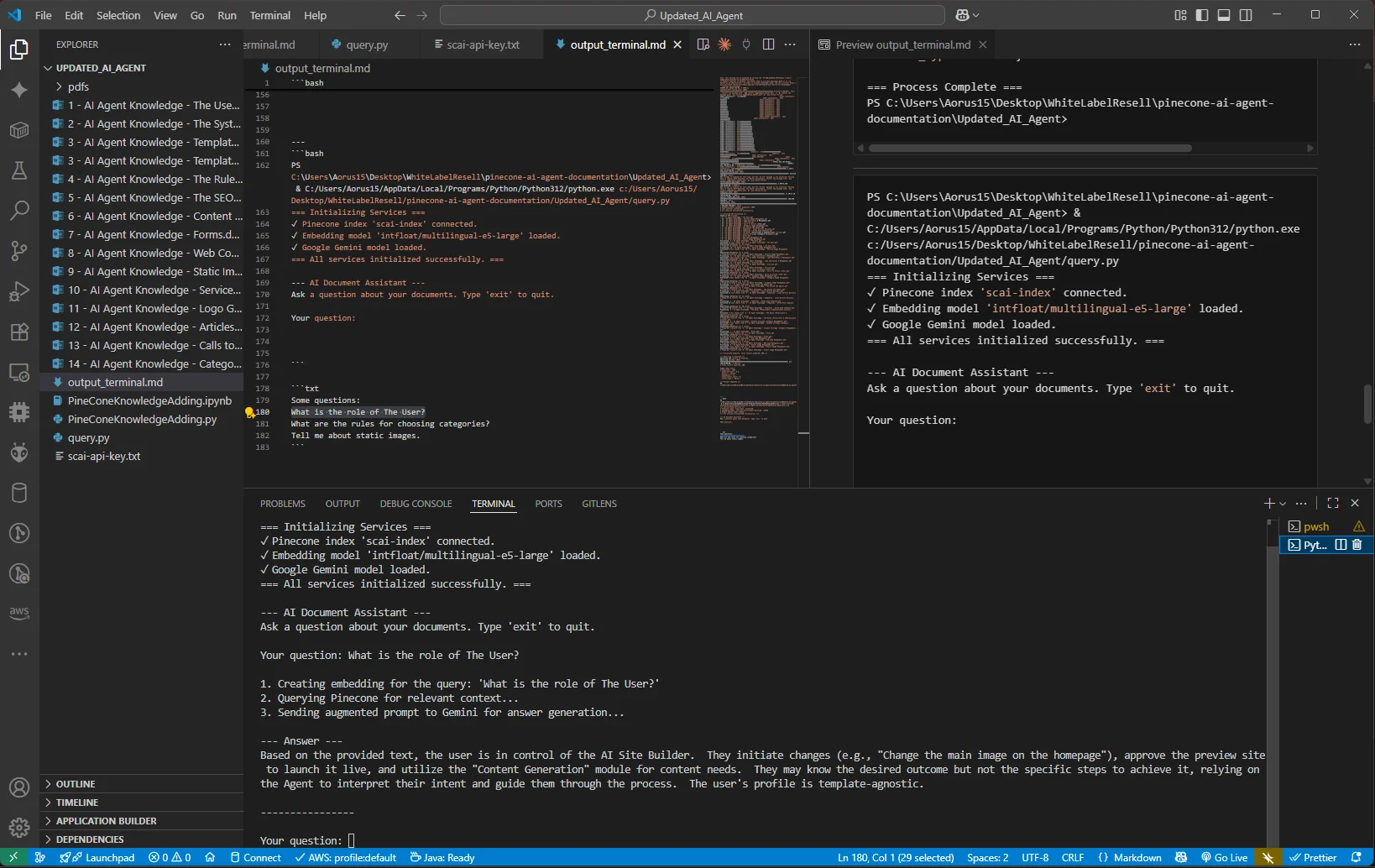

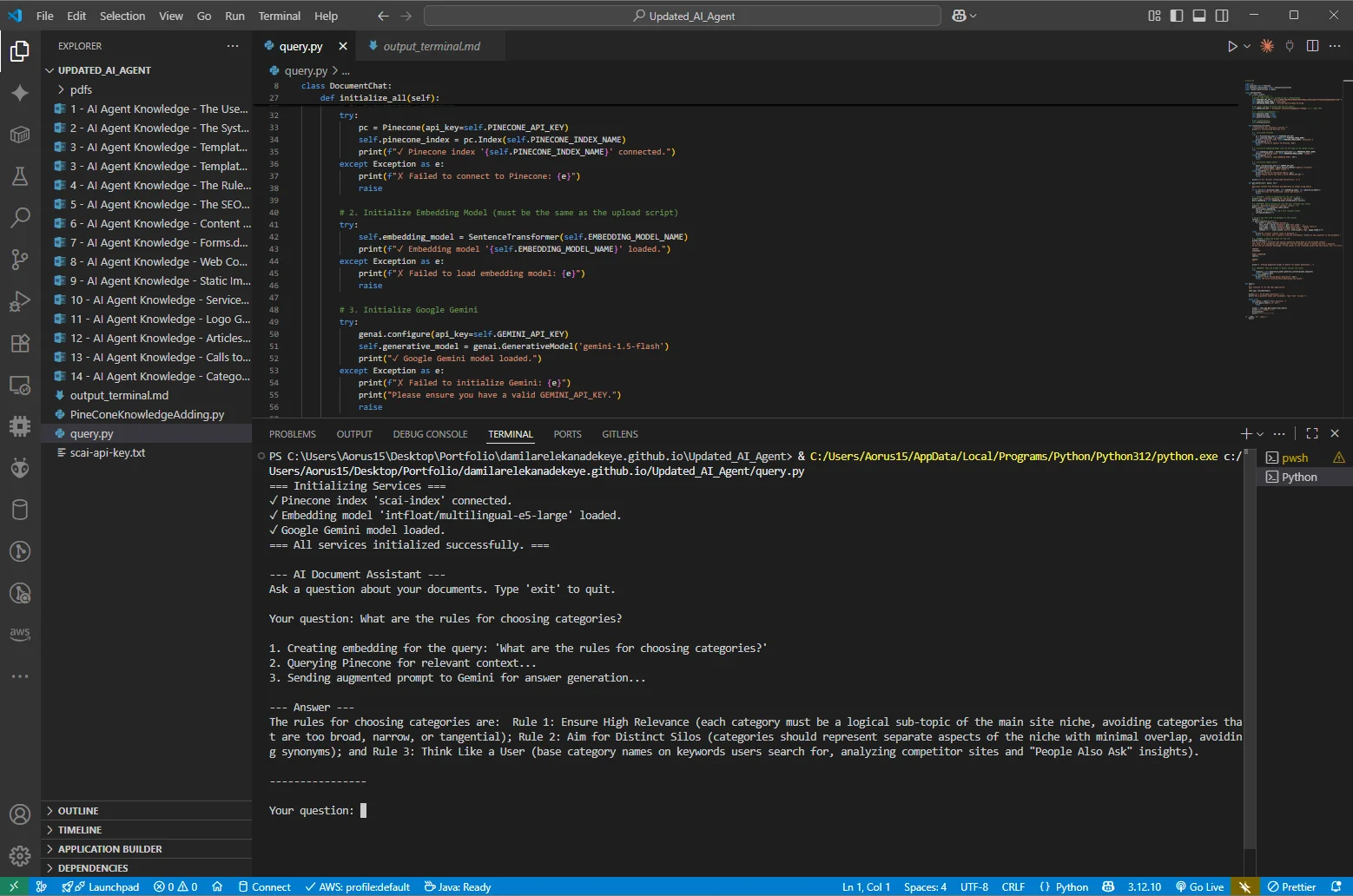

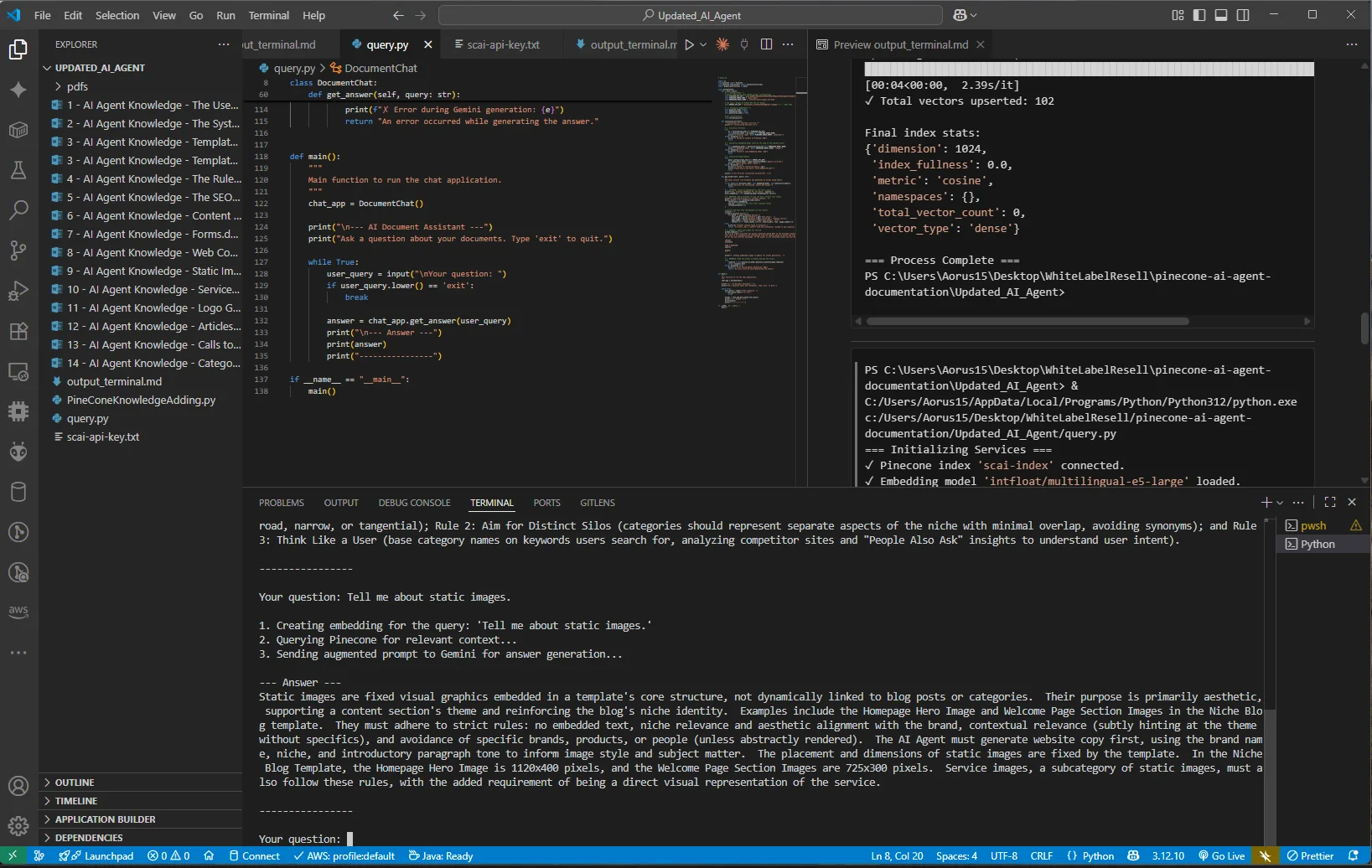

The system demonstrates excellent contextual understanding and retrieval accuracy. Below are actual screenshots from live query sessions showing the RAG system in action:

Live Query Example 1: Understanding User Context

The system correctly identifies and synthesizes information about user roles from the AI Agent documentation

Live Query Example 2: Content Strategy Rules

RAG system provides structured, rule-based guidance directly from documentation context

Live Query Example 3: Technical Documentation Retrieval

Complex technical query returns comprehensive details including dimensions, rules, and usage guidelines

Sample Query Responses

The following text output demonstrates the system's ability to provide accurate, context-aware responses:

Query: "What is the role of The User?"

Response: "Based on the provided text, the user is in control of the AI Site Builder.

They initiate changes, approve the preview site to launch it live, and utilize

the Content Generation module for content needs..."

Query: "What are the rules for choosing categories?"

Response: "The rules for choosing categories are:

Rule 1: Ensure High Relevance (each category must be a logical sub-topic of the main

site niche, avoiding categories that are too broad, narrow, or tangential)

Rule 2: Aim for Distinct Silos (categories should represent separate aspects of the

niche with minimal overlap, avoiding synonyms)

Rule 3: Think Like a User (base category names on keywords users search for, analyzing

competitor sites and 'People Also Ask' insights)..."

Query: "Tell me about static images"

Response: "Static images are fixed visual graphics embedded in a template's core structure,

not dynamically linked to blog posts or categories. Their purpose is primarily aesthetic,

supporting a content section's theme and reinforcing the blog's niche identity.

They must adhere to strict rules: no embedded text, niche relevance and aesthetic

alignment with the brand, contextual relevance..."Development Environment & Implementation

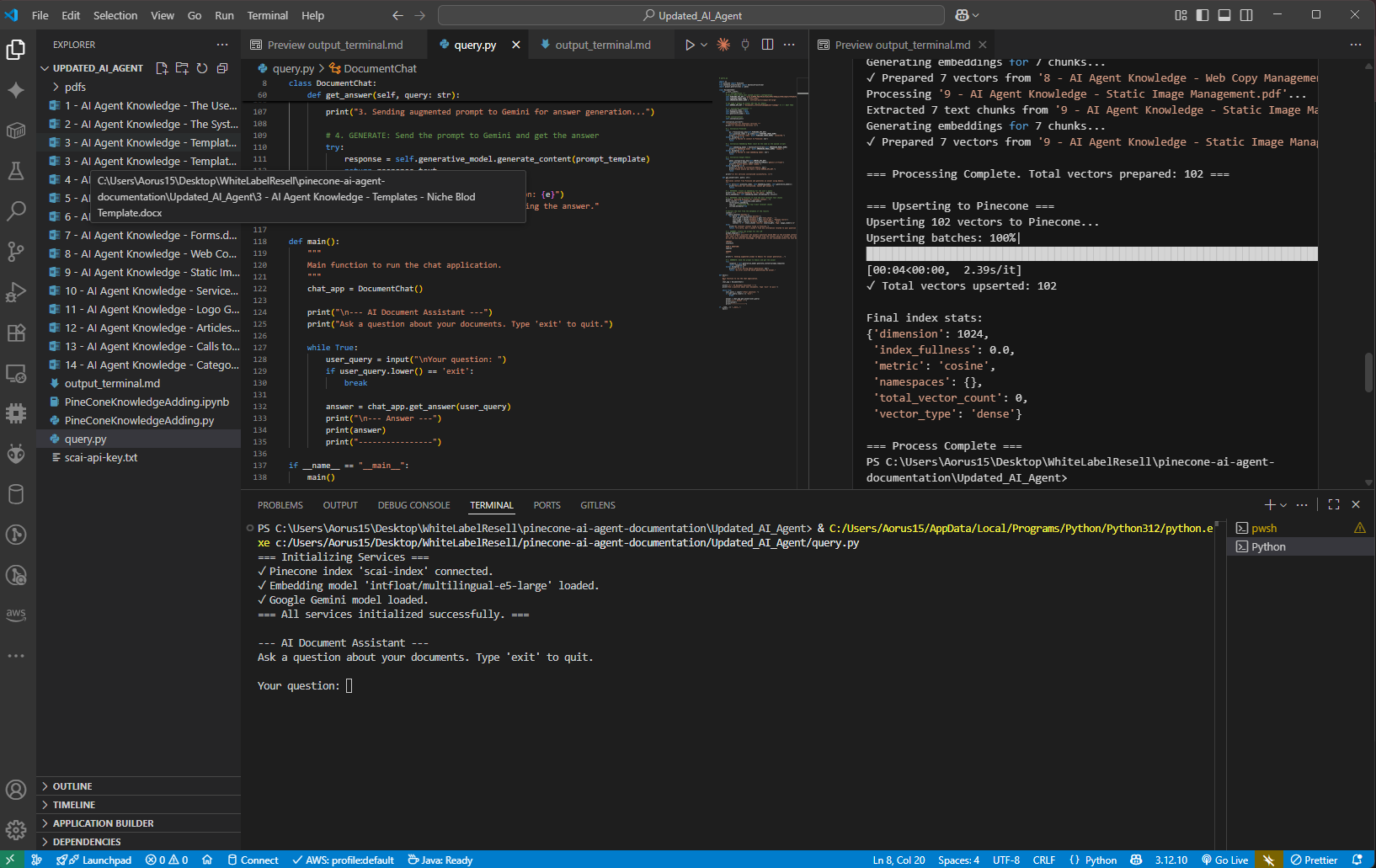

Development Setup in VS Code

VS Code environment with query.py implementation,

terminal showing successful Pinecone and Gemini initialization

VS Code environment with query.py implementation,

terminal showing successful Pinecone and Gemini initialization

Code Implementation

View Complete Document Processing Pipeline (PineConeKnowledgeAdding.py)

# pip install pinecone pypdf sentence-transformers langchain langchain_text_splitters tqdm

"""

PDF to Pinecone Vector Database Script - VSCode Compatible

=========================================================

This script processes PDF files from a local directory and uploads them to Pinecone vector database.

"""

import os

import uuid

import glob

from pathlib import Path

from pinecone import Pinecone

from pypdf import PdfReader

from langchain_text_splitters import RecursiveCharacterTextSplitter

from sentence_transformers import SentenceTransformer

from tqdm.auto import tqdm

def install_dependencies():

"""

Install required dependencies. Run this function first if libraries are not installed.

You can also install them manually using:

pip install pinecone pypdf sentence-transformers langchain langchain_text_splitters tqdm

"""

import subprocess

import sys

packages = [

"pinecone",

"pypdf",

"sentence-transformers",

"langchain",

"langchain_text_splitters",

"tqdm"

]

for package in packages:

try:

subprocess.check_call([sys.executable, "-m", "pip", "install", package, "--upgrade"])

print(f"✓ Installed/updated {package}")

except subprocess.CalledProcessError as e:

print(f"✗ Failed to install {package}: {e}")

print("Dependencies installation completed.")

class PDFToPinecone:

def __init__(self):

# Configuration

self.PINECONE_API_KEY = "pcsk_6jWP9D_***************_dhdq8GtCjz6M"

self.PINECONE_INDEX_NAME = "scai-index"

self.EMBEDDING_MODEL_NAME = 'intfloat/multilingual-e5-large'

self.EXPECTED_DIMENSION = 1024

self.CHUNK_SIZE = 1000

self.CHUNK_OVERLAP = 100

self.PINECONE_BATCH_SIZE = 100

self.PDF_DIRECTORY = "./pdfs" # Directory containing PDF files

# Initialize components

self.pc = None

self.index = None

self.embedding_model = None

self.text_splitter = None

def print_config(self):

"""Print current configuration"""

print(f"Configuration:")

print(f" - Pinecone Index: {self.PINECONE_INDEX_NAME}")

print(f" - Embedding Model: {self.EMBEDDING_MODEL_NAME}")

print(f" - Expected Dimension: {self.EXPECTED_DIMENSION}")

print(f" - Chunk Size: {self.CHUNK_SIZE}")

print(f" - Chunk Overlap: {self.CHUNK_OVERLAP}")

print(f" - Pinecone Batch Size: {self.PINECONE_BATCH_SIZE}")

print(f" - PDF Directory: {self.PDF_DIRECTORY}")

def setup_pdf_directory(self):

"""Create PDF directory if it doesn't exist"""

os.makedirs(self.PDF_DIRECTORY, exist_ok=True)

print(f"PDF directory ready: {self.PDF_DIRECTORY}")

def get_pdf_files(self):

"""Get list of PDF files from the directory"""

pdf_pattern = os.path.join(self.PDF_DIRECTORY, "*.pdf")

pdf_files = glob.glob(pdf_pattern)

if not pdf_files:

print(f"No PDF files found in {self.PDF_DIRECTORY}")

print(f"Please add PDF files to the directory: {os.path.abspath(self.PDF_DIRECTORY)}")

return []

print(f"Found {len(pdf_files)} PDF file(s):")

for pdf_file in pdf_files:

print(f"- {os.path.basename(pdf_file)}")

return pdf_files

def initialize_pinecone(self):

"""Initialize Pinecone connection and validate/create index"""

print("Initializing Pinecone...")

try:

# Connect to Pinecone

self.pc = Pinecone(api_key=self.PINECONE_API_KEY)

print(f"Connecting to Pinecone index '{self.PINECONE_INDEX_NAME}'...")

# Check if index exists

index_list_response = self.pc.list_indexes()

# Extract index names

actual_index_names = []

if hasattr(index_list_response, 'indexes'):

index_descriptions = index_list_response.indexes

actual_index_names = [idx.name for idx in index_descriptions]

elif isinstance(index_list_response, list):

try:

actual_index_names = [idx['name'] for idx in index_list_response]

except (TypeError, KeyError):

actual_index_names = list(index_list_response)

else:

actual_index_names = list(index_list_response)

print(f"Available Pinecone indexes: {actual_index_names}")

# Create index if it doesn't exist

if self.PINECONE_INDEX_NAME not in actual_index_names:

print(f"Index '{self.PINECONE_INDEX_NAME}' not found. Creating new index...")

try:

# Create the index with appropriate configuration

from pinecone import ServerlessSpec

self.pc.create_index(

name=self.PINECONE_INDEX_NAME,

dimension=self.EXPECTED_DIMENSION,

metric='cosine', # or 'euclidean', 'dotproduct'

spec=ServerlessSpec(

cloud='aws', # or 'gcp'

region='us-east-1' # adjust based on your preference

)

)

# Wait for index to be ready

print("Waiting for index to be ready...")

import time

while self.PINECONE_INDEX_NAME not in [idx.name for idx in self.pc.list_indexes().indexes]:

time.sleep(1)

print(f"✓ Index '{self.PINECONE_INDEX_NAME}' created successfully.")

except Exception as create_error:

print(f"✗ Failed to create index: {create_error}")

print("You may need to create the index manually in the Pinecone console.")

raise

# Connect to index and get stats

self.index = self.pc.Index(self.PINECONE_INDEX_NAME)

index_stats = self.index.describe_index_stats()

pinecone_dimension = index_stats.dimension

print(f"✓ Pinecone index '{self.PINECONE_INDEX_NAME}' connected successfully.")

print(f"Index stats: {index_stats}")

# Check dimension compatibility

if pinecone_dimension != self.EXPECTED_DIMENSION:

print(f"⚠️ WARNING: Pinecone index dimension ({pinecone_dimension}) != expected dimension ({self.EXPECTED_DIMENSION})")

# Update expected dimension to match Pinecone

self.EXPECTED_DIMENSION = pinecone_dimension

except Exception as e:

print(f"✗ Failed to initialize Pinecone: {e}")

raise

def initialize_embedding_model(self):

"""Initialize the sentence transformer embedding model"""

print(f"Loading Sentence Transformer model '{self.EMBEDDING_MODEL_NAME}'...")

try:

self.embedding_model = SentenceTransformer(self.EMBEDDING_MODEL_NAME)

# Test the model and check dimension

test_embedding = self.embedding_model.encode("test sentence")

model_dimension = len(test_embedding)

if model_dimension != self.EXPECTED_DIMENSION:

print(f"⚠️ Model dimension ({model_dimension}) != expected ({self.EXPECTED_DIMENSION})")

print("Updating expected dimension based on loaded model.")

self.EXPECTED_DIMENSION = model_dimension

print(f"✓ Embedding model loaded. Dimension: {self.EXPECTED_DIMENSION}")

except Exception as e:

print(f"✗ Failed to load embedding model: {e}")

raise

def initialize_text_splitter(self):

"""Initialize the text splitter"""

self.text_splitter = RecursiveCharacterTextSplitter(

chunk_size=self.CHUNK_SIZE,

chunk_overlap=self.CHUNK_OVERLAP,

length_function=len,

add_start_index=True,

)

print("✓ Text splitter initialized.")

def initialize_all(self):

"""Initialize all components"""

print("=== Initializing Services ===")

self.setup_pdf_directory()

self.initialize_pinecone()

self.initialize_embedding_model()

self.initialize_text_splitter()

print("✓ All services initialized successfully.\n")

def process_pdf(self, pdf_path):

"""Process a single PDF file and return vectors"""

filename = os.path.basename(pdf_path)

print(f"Processing '{filename}'...")

try:

reader = PdfReader(pdf_path)

doc_chunks = []

# Extract text page by page

for i, page in enumerate(tqdm(reader.pages, desc=f"Reading pages from {filename}", leave=False)):

page_text = page.extract_text()

if page_text:

# Split page text into chunks

page_chunks = self.text_splitter.create_documents(

[page_text],

metadatas=[{"source": filename, "page": i + 1}]

)

# Add metadata to each chunk

for chunk_doc in page_chunks:

cleaned_text = chunk_doc.page_content.replace('\n', ' ').strip()

chunk_doc.metadata['text_chunk'] = cleaned_text

chunk_doc.metadata['chunk_start_index'] = chunk_doc.metadata.get('start_index', -1)

doc_chunks.extend(page_chunks)

if not doc_chunks:

print(f"⚠️ No text extracted from '{filename}'. Skipping.")

return []

print(f"Extracted {len(doc_chunks)} text chunks from '{filename}'.")

# Generate embeddings

print(f"Generating embeddings for {len(doc_chunks)} chunks...")

chunk_texts = [chunk.metadata['text_chunk'] for chunk in doc_chunks]

embeddings = self.embedding_model.encode(chunk_texts, show_progress_bar=False)

# Prepare vectors for Pinecone

vectors = []

for i, chunk_doc in enumerate(doc_chunks):

vector_id = f"{filename}_pg{chunk_doc.metadata['page']}_chk{i}"

metadata_for_pinecone = {

"source_pdf": chunk_doc.metadata.get("source", "unknown"),

"page_number": chunk_doc.metadata.get("page", -1),

"chunk_start_index": chunk_doc.metadata.get("chunk_start_index", -1),

"text_chunk": chunk_doc.metadata.get("text_chunk", "")

}

vectors.append((

vector_id,

embeddings[i].tolist(),

metadata_for_pinecone

))

print(f"✓ Prepared {len(vectors)} vectors from '{filename}'.")

return vectors

except Exception as e:

print(f"✗ Error processing '{filename}': {e}")

return []

def upsert_vectors(self, all_vectors):

"""Upsert vectors to Pinecone in batches"""

if not all_vectors:

print("No vectors to upsert.")

return 0

print(f"Upserting {len(all_vectors)} vectors to Pinecone...")

upserted_count = 0

for i in tqdm(range(0, len(all_vectors), self.PINECONE_BATCH_SIZE), desc="Upserting batches"):

batch = all_vectors[i:i + self.PINECONE_BATCH_SIZE]

try:

upsert_response = self.index.upsert(vectors=batch)

upserted_count += upsert_response.upserted_count

except Exception as e:

print(f"✗ Error upserting batch starting at index {i}: {e}")

print(f"✓ Total vectors upserted: {upserted_count}")

return upserted_count

def process_all_pdfs(self):

"""Main processing function"""

print("=== Starting PDF Processing ===")

# Get PDF files

pdf_files = self.get_pdf_files()

if not pdf_files:

return

# Process each PDF

all_vectors = []

for pdf_path in pdf_files:

vectors = self.process_pdf(pdf_path)

all_vectors.extend(vectors)

print(f"\n=== Processing Complete. Total vectors prepared: {len(all_vectors)} ===")

# Upsert to Pinecone

if all_vectors:

print("\n=== Upserting to Pinecone ===")

self.upsert_vectors(all_vectors)

# Final index stats

try:

print("\nFinal index stats:")

print(self.index.describe_index_stats())

except Exception as e:

print(f"Could not retrieve final index stats: {e}")

print("\n=== Process Complete ===")

def main():

"""Main execution function"""

# Uncomment the line below if you need to install dependencies

# install_dependencies()

# Create processor instance

processor = PDFToPinecone()

# Print configuration

processor.print_config()

try:

# Initialize all services

processor.initialize_all()

# Process all PDFs

processor.process_all_pdfs()

except Exception as e:

print(f"✗ An error occurred: {e}")

print("Please check your configuration and try again.")

if __name__ == "__main__":

main()View RAG Query System (query.py)

import os

from pinecone import Pinecone

from sentence_transformers import SentenceTransformer

import google.generativeai as genai

class DocumentChat:

def __init__(self):

# Configuration

self.PINECONE_API_KEY = "pcsk_6jWP9D_***************_dhdq8GtCjz6M"

self.PINECONE_INDEX_NAME = "scai-index"

self.EMBEDDING_MODEL_NAME = 'intfloat/multilingual-e5-large'

self.GEMINI_API_KEY = "AIzaSyCZFc_***************_tjSaKgs"

# Initialize Components

self.pinecone_index = None

self.embedding_model = None

self.generative_model = None

self.initialize_all()

def initialize_all(self):

"""Initializes all necessary services."""

print("=== Initializing Services ===")

# 1. Initialize Pinecone

try:

pc = Pinecone(api_key=self.PINECONE_API_KEY)

self.pinecone_index = pc.Index(self.PINECONE_INDEX_NAME)

print(f"✓ Pinecone index '{self.PINECONE_INDEX_NAME}' connected.")

except Exception as e:

print(f"✗ Failed to connect to Pinecone: {e}")

raise

# 2. Initialize Embedding Model

try:

self.embedding_model = SentenceTransformer(self.EMBEDDING_MODEL_NAME)

print(f"✓ Embedding model '{self.EMBEDDING_MODEL_NAME}' loaded.")

except Exception as e:

print(f"✗ Failed to load embedding model: {e}")

raise

# 3. Initialize Google Gemini

try:

genai.configure(api_key=self.GEMINI_API_KEY)

self.generative_model = genai.GenerativeModel('gemini-1.5-flash')

print("✓ Google Gemini model loaded.")

except Exception as e:

print(f"✗ Failed to initialize Gemini: {e}")

raise

print("=== All services initialized successfully. ===")

def get_answer(self, query: str):

"""

Retrieves context from Pinecone and generates an answer using Gemini.

"""

# 1. RETRIEVE: Create an embedding for the user's query

print(f"\n1. Creating embedding for the query: '{query}'")

query_embedding = self.embedding_model.encode(query).tolist()

# 2. RETRIEVE: Query Pinecone for relevant chunks

print("2. Querying Pinecone for relevant context...")

query_results = self.pinecone_index.query(

vector=query_embedding,

top_k=5, # Retrieve top 5 most relevant chunks

include_metadata=True

)

# Extract text from metadata

context = ""

if query_results['matches']:

for match in query_results['matches']:

text_chunk = match['metadata'].get('text_chunk', '')

source_pdf = match['metadata'].get('source_pdf', 'Unknown')

page_number = match['metadata'].get('page_number', 'N/A')

context += f"- {text_chunk} (Source: {source_pdf}, Page: {page_number})\n"

else:

return "No relevant context found in the documents."

# 3. AUGMENT: Create the prompt for the LLM

prompt_template = f"""

You are an expert assistant who answers questions based ONLY on the provided context.

Synthesize the information from the context below to answer the user's question.

Do not use external knowledge. If the answer is not in the text, say so.

CONTEXT:

{context}

USER'S QUESTION:

{query}

ANSWER:

"""

print("3. Sending augmented prompt to Gemini for answer generation...")

# 4. GENERATE: Send prompt to Gemini

try:

response = self.generative_model.generate_content(prompt_template)

return response.text

except Exception as e:

print(f"✗ Error during Gemini generation: {e}")

return "An error occurred while generating the answer."

def main():

"""Main function to run the chat application."""

chat_app = DocumentChat()

print("\n--- AI Document Assistant ---")

print("Ask a question about your documents. Type 'exit' to quit.")

while True:

user_query = input("\nYour question: ")

if user_query.lower() == 'exit':

break

answer = chat_app.get_answer(user_query)

print("\n--- Answer ---")

print(answer)

print("----------------")

if __name__ == "__main__":

main()Challenges & Solutions

- Challenge: Managing large PDF documents with varying structures and

formats.

Solution: Implemented recursive text splitting with intelligent overlap to maintain context across chunk boundaries while respecting token limits. - Challenge: Ensuring semantic search accuracy across technical

documentation.

Solution: Selected the multilingual E5-Large model for its superior performance on technical content and 1024-dimensional embeddings for nuanced representation. - Challenge: Optimizing query response time for real-time interaction.

Solution: Implemented efficient batch processing for embeddings and leveraged Pinecone's optimized ANN (Approximate Nearest Neighbor) search algorithms. - Challenge: Preventing hallucination in LLM responses.

Solution: Designed strict prompt templates that constrain Gemini to only use retrieved context, with explicit instructions to acknowledge when information is not available.

Technical Skills Demonstrated

- Vector Database Management: Proficiency with Pinecone for high-dimensional vector storage and similarity search

- Natural Language Processing: Implementation of advanced NLP techniques using transformer models for semantic understanding

- RAG Architecture: Design and implementation of production-grade Retrieval-Augmented Generation systems

- Document Processing: Sophisticated PDF parsing and chunking strategies for optimal information retrieval

- API Integration: Seamless orchestration of multiple AI services (Pinecone, Google Gemini, HuggingFace models)

- Python Development: Clean, modular code with comprehensive error handling and progress monitoring

- AI/ML Engineering: Understanding of embeddings, vector spaces, and similarity metrics for semantic search

Future Enhancements

- Implement hybrid search combining vector similarity with keyword matching for improved precision

- Add support for real-time document updates with incremental indexing

- Integrate conversation memory for multi-turn dialogue with context retention

- Develop a web-based interface for easier accessibility and deployment

- Implement advanced analytics to track query patterns and optimize retrieval strategies

- Add support for multiple file formats (Word, Excel, HTML) beyond PDF

Demonstration & Access

- GitHub Repository: View complete source code & documentation

- Technical Documentation: Detailed setup and usage instructions

- Live Demo: Available upon request for technical evaluation

Thank You for Visiting My Portfolio

This AI-Powered RAG Knowledge Assistant demonstrates my expertise in building sophisticated AI systems that bridge the gap between static documentation and intelligent, interactive knowledge retrieval. By combining cutting-edge vector database technology with advanced language models, I've created a solution that transforms how organizations access and utilize their documentation.

The project showcases not just technical implementation skills, but also the ability to architect complex AI systems that deliver real business value through improved information accessibility and user experience. I'm passionate about leveraging AI to solve real-world problems and would be excited to discuss how these technologies can be applied to your organization's challenges.

For inquiries about this project or potential collaborations in AI/ML development, please reach out via the Contact section. I look forward to exploring how we can build intelligent solutions together.

Best regards,

Damilare Lekan Adekeye